Age & Sex recognition from facial images (2015-2016)

This project is part of my thesis for the Master of Science in Applied Mathematics and of the work I did at the Center of “Computer vision and semantic analysis of images” at the Moscow Institute of Electronic Technology. I will cover only the sex recognition part, as it offers the most interesting perspective on earlier approaches to computer vision problems.

Sex recognition

Problem setting

Learn a binary classifier $f: \mathcal{X} \rightarrow \mathcal{Y}$, where the targets are $\mathcal{Y} = \{0 \text{ (female)}, 1 \text{ (male)}\}$ and the inputs are images $\mathcal{X} = \mathbb{R}^{w\times h}$.

Classifier on Pixel Comparisons and AdaBoost

The classifier based on pixel comparisons and AdaBoost was presented by authors from Google at AAAI-2005 [1] and is related to the Viola-Jones framework.

- [1] Baluja, S., Rowley, H.A.: Boosting sex identification performance, pp. 1508–1513. AAAI Press, Menlo Park (2005)

First, we need to define the weak classifiers $h(x)$. They are called weak because each one individually has little discriminative power: it is only slightly better than a coin flip. In the paper, the authors use simple pixel comparisons defined as follows:

\(h(x)= \begin{cases}1 & c( pixel_{i}, pixel_{j})=1 \\ 0 & c( pixel_{i}, pixel_{j})=0\end{cases}\),

where $c(pixel_i, pixel_j)$ is a function that compares $pixel_i$ with $pixel_j$. The authors used five types of comparisons, $|pixel_i - pixel_j| > p$ with $p \in \{0, 5, 10, 25, 50\}$.
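A minimal Python sketch of one such weak classifier is shown below. It assumes a grayscale image stored as a flat list of 8-bit intensities and follows the $|pixel_i - pixel_j| > p$ form above. The `inverse` flag models the inverse comparison. The class name `WeakClassifier` and its fields are hypothetical and are not taken from [1] or from my implementation.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class WeakClassifier:
    """Hypothetical pixel-comparison weak classifier h(x) returning 0 or 1."""
    i: int                 # index of the first pixel in the flattened image
    j: int                 # index of the second pixel
    p: int                 # comparison threshold
    inverse: bool = False  # if True, output the negated comparison

    def predict(self, image: Sequence[int]) -> int:
        # c(pixel_i, pixel_j) = 1 when the absolute difference exceeds p
        c = abs(image[self.i] - image[self.j]) > self.p
        return int(c != self.inverse)  # XOR with the inverse flag


img = [10, 40, 12, 200]  # a tiny 2x2 image, flattened row by row
print(WeakClassifier(i=0, j=1, p=25).predict(img))  # prints 1, since |10 - 40| = 30 > 25
```

Each classifier reads only two pixels, which is why inference is so cheap.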

Although individual weak classifiers have little discriminative power, a weighted vote of $T$ of them can form a much stronger classifier. AdaBoost builds such a combination greedily, adding one weak classifier $h_t(x)$ per iteration:

\(S(x)= \begin{cases}1, & \sum_{t=1}^{T} \log (\frac{1-e_t}{e_{t}}) h_{t}(x) \geq \frac{1}{2} \sum_{t=1}^{T} \log (\frac{1-e_t}{e_{t}}) \\ 0, & otherwise\end{cases}\).
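To make the decision rule concrete, here is a minimal Python sketch that evaluates $S(x)$. Its inputs are the weak classifier outputs $h_t(x) \in \{0, 1\}$ and their weighted errors $e_t$ (defined in the next paragraph). It assumes $0 < e_t < 0.5$, so that every vote weight $\log((1-e_t)/e_t)$ is positive. The function name `strong_classify` is hypothetical.

```python
import math
from typing import Sequence


def strong_classify(weak_outputs: Sequence[int], errors: Sequence[float]) -> int:
    """Evaluate S(x) from h_t(x) in {0, 1} and weighted errors e_t in (0, 0.5)."""
    # Vote weight log((1 - e_t) / e_t): more accurate weak classifiers vote louder.
    alphas = [math.log((1.0 - e) / e) for e in errors]
    vote = sum(a * h for a, h in zip(alphas, weak_outputs))
    # Output 1 when the weighted vote reaches half of the total vote weight.
    return 1 if vote >= 0.5 * sum(alphas) else 0


print(strong_classify([1, 0, 0], [0.1, 0.3, 0.4]))  # prints 1
```

In this example a single accurate weak classifier ($e_t = 0.1$) outvotes two weaker ones that disagree with it.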

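At each iteration $t$, the algorithm selects the weak classifier that minimizes the total weighted error $e_{t} = \sum_{n=1}^{N} w_{t,n} |h_t(x_n) - y_n|$. Here $w_{t,n}$ is the normalized weight of training sample $x_n$, and the weights sum to one. The weights are initialized so that each class carries a total weight of 0.5, and they are adjusted every iteration according to $e_t$. As in Viola-Jones, the weights of correctly classified samples are multiplied by $\beta_t = e_t / (1 - e_t)$ and then renormalized. Misclassified samples therefore gain relative weight, and the next weak classifier concentrates on them.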
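One boosting round can be sketched in Python as follows. This is a minimal sketch that assumes a Viola-Jones style update. Each class starts with a total weight of 0.5. After $h_t$ is selected, the weights of correctly classified samples are multiplied by $\beta_t = e_t/(1-e_t)$, and all weights are renormalized. The names `init_weights` and `boosting_round`, and the clamping constant `eps`, are hypothetical and are not taken from [1] or from my implementation.

```python
from typing import Callable, List, Sequence, Tuple

# A weak classifier maps a sample (e.g. a flattened image) to 0 or 1.
WeakFn = Callable[[Sequence[int]], int]


def init_weights(labels: Sequence[int]) -> List[float]:
    """Give each class a total weight of 0.5, split evenly among its samples."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    return [0.5 / n_pos if y == 1 else 0.5 / n_neg for y in labels]


def boosting_round(candidates: Sequence[WeakFn], samples: Sequence[Sequence[int]],
                   labels: Sequence[int], weights: Sequence[float]
                   ) -> Tuple[WeakFn, float, List[float]]:
    """Select the weak classifier with minimal weighted error e_t, then reweight."""
    best, best_err, best_preds = None, float("inf"), []
    for h in candidates:
        preds = [h(x) for x in samples]
        err = sum(w * abs(p - y) for w, p, y in zip(weights, preds, labels))
        if err < best_err:
            best, best_err, best_preds = h, err, preds
    # Clamp e_t away from 0 and 1 so beta_t and log((1 - e_t) / e_t) stay finite.
    eps = 1e-10
    best_err = min(max(best_err, eps), 1.0 - eps)
    beta = best_err / (1.0 - best_err)
    # Down-weight correctly classified samples; misclassified ones keep their weight.
    new_w = [w * beta if p == y else w for w, p, y in zip(weights, best_preds, labels)]
    total = sum(new_w)
    return best, best_err, [w / total for w in new_w]
```

Repeating `boosting_round` $T$ times and keeping each selected $h_t$ together with its $e_t$ gives the terms of $S(x)$. Each round evaluates every candidate on every sample, which is why training cost grows with both the number of candidates and the sample size.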

The number of possible weak classifiers is $O(n^{2})$, where $n$ is the number of pixels. For example, a $20 \times 20$ image has $400 \times 399 = 159{,}600$ ordered pixel pairs. With 5 types of pixel comparisons and their inverses, this gives about 1.6 million candidate weak classifiers.
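The quadratic growth is easy to check numerically. The small Python sketch below assumes ordered pixel pairs $(i, j)$ with $i \neq j$, 5 comparison types, and a factor of 2 for the inverses. The function name `count_weak_classifiers` is hypothetical.

```python
def count_weak_classifiers(width: int, height: int, n_types: int = 5,
                           with_inverses: bool = True) -> int:
    """Count pixel-comparison weak classifiers over ordered pixel pairs (i != j)."""
    n = width * height   # number of pixels
    pairs = n * (n - 1)  # ordered pairs: grows as O(n^2)
    return pairs * n_types * (2 if with_inverses else 1)


print(count_weak_classifiers(20, 20))  # prints 1596000
print(count_weak_classifiers(40, 40))  # prints 25584000
```

Doubling the image side multiplies the pixel count by 4 and the number of candidates by roughly 16.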

Strengths

- Inference is fast, even on a CPU

- Easy to implement on embedded hardware such as DSPs

Limitations

- Unlike current deep learning methods, it does not scale well with the number of pixels ($O(n^{2})$ candidate weak classifiers) or with the sample size

My C++ implementation of the training algorithm is available on GitHub. To reduce training time, I added a trick: at each step of AdaBoost, only a randomly selected subset of the weak classifiers is evaluated. This did not sacrifice performance.
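The random-subset idea can be sketched in Python as shown below. This is a minimal sketch and not the GitHub implementation. The candidate encoding `(i, j, p, inverse)` and the names `sample_candidates`, `evaluate` and `best_of_subset` are hypothetical. Each AdaBoost step draws `k` random pixel comparisons instead of enumerating all $O(n^2)$ of them, and picks the one with the smallest weighted error.

```python
import random
from typing import List, Sequence, Tuple

# Hypothetical encoding of a weak classifier: (pixel i, pixel j, threshold p, inverse).
Candidate = Tuple[int, int, int, bool]


def sample_candidates(n_pixels: int, thresholds: Sequence[int], k: int,
                      rng: random.Random) -> List[Candidate]:
    """Draw k random pixel-comparison classifiers instead of enumerating all of them."""
    out = []
    for _ in range(k):
        i, j = rng.sample(range(n_pixels), 2)  # two distinct pixel indices
        out.append((i, j, rng.choice(thresholds), rng.random() < 0.5))
    return out


def evaluate(c: Candidate, image: Sequence[int]) -> int:
    """h(x) = 1 if |pixel_i - pixel_j| > p (negated when inverse is set)."""
    i, j, p, inverse = c
    return int((abs(image[i] - image[j]) > p) != inverse)


def best_of_subset(candidates: Sequence[Candidate], images: Sequence[Sequence[int]],
                   labels: Sequence[int], weights: Sequence[float]
                   ) -> Tuple[Candidate, float]:
    """Return the sampled candidate with the smallest weighted error e_t."""
    def weighted_error(c: Candidate) -> float:
        return sum(w * abs(evaluate(c, x) - y)
                   for x, y, w in zip(images, labels, weights))
    best = min(candidates, key=weighted_error)
    return best, weighted_error(best)
```

The cost per step then scales with `k` rather than with the full candidate count. The trade-off is that the selected weak classifier is only the best within the sample.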

Classifier with Convolutional Neural Networks

I will not explain convolutional neural networks (CNNs) here, as there are many valuable resources such as the Stanford CS231n course. Instead, I give the hyperparameters of the CNNs for comparison with AdaBoost. I used two CNNs based on the VGG architecture, with one and two VGG blocks respectively. Let:

- BLOCK(i,x) = Conv2D(i,x,3) + BN + ReLU + Conv2D(x,x,3) + BN + ReLU + MaxPool(2,2);

- FCN(o) = Linear(o, 128) + DP*(0.5) + Linear(128, 128) + DP*(0.5) + Linear(128, 2) + Sigmoid,

where o is the (flattened) output size of the last BLOCK, and the symbol * marks optional dropout (DP).

- Architecture #1: BLOCK(1,32) + FCN(o)

- Architecture #2: BLOCK(1,32) + BLOCK(32,64) + FCN(o)
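The input resolution of the CNNs is not stated here. The Python sketch below therefore computes o for a hypothetical $20 \times 20$ grayscale input, the resolution used in the AdaBoost example. It assumes $3 \times 3$ convolutions with stride 1 and padding 1 (as in VGG) and MaxPool(2,2) with stride 2. It also counts the weights and biases of the FCN head, assuming the second and third linear layers take the 128 features produced by the previous layer. All function names are hypothetical.

```python
from typing import Sequence


def conv_out(size: int, kernel: int = 3, padding: int = 1) -> int:
    """Spatial output size of a stride-1 convolution."""
    return size + 2 * padding - kernel + 1


def fcn_input_size(h: int, w: int, block_channels: Sequence[int],
                   padding: int = 1) -> int:
    """Flattened size o after a stack of BLOCKs with the given output channels."""
    for _ in block_channels:
        # Two 3x3 convolutions per BLOCK
        h = conv_out(conv_out(h, padding=padding), padding=padding)
        w = conv_out(conv_out(w, padding=padding), padding=padding)
        h, w = h // 2, w // 2  # MaxPool(2,2) with stride 2
    return block_channels[-1] * h * w


def fcn_params(o: int) -> int:
    """Weights + biases of Linear(o,128), Linear(128,128), Linear(128,2)."""
    return (o * 128 + 128) + (128 * 128 + 128) + (128 * 2 + 2)


print(fcn_input_size(20, 20, [32]))      # Architecture #1: prints 3200
print(fcn_input_size(20, 20, [32, 64]))  # Architecture #2: prints 1600
```

Most of the head's parameters sit in the first linear layer. This makes o, and therefore the input resolution and number of BLOCKs, the main driver of model size. Pass `padding=0` to see the effect of unpadded convolutions.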

The networks were trained with the cross-entropy loss and SGD (lr=0.01).

Results

| Approach | Accuracy | Time (ms) | Hardware |
|---|---|---|---|
| AdaBoost and pixel comparisons (1000 weak classifiers) | 86.2% | 0.03 | Intel Core i7-4510U CPU @ 2.00GHz |
| Architecture #1 | 86.5% | 6 | NVIDIA GeForce GTX 660 GPU |
| Architecture #1 + DP | 90.9% | 6 | NVIDIA GeForce GTX 660 GPU |
| Architecture #2 | 89.7% | 6 | NVIDIA GeForce GTX 660 GPU |
| Architecture #2 + DP | 90.5% | 6 | NVIDIA GeForce GTX 660 GPU |

Examples

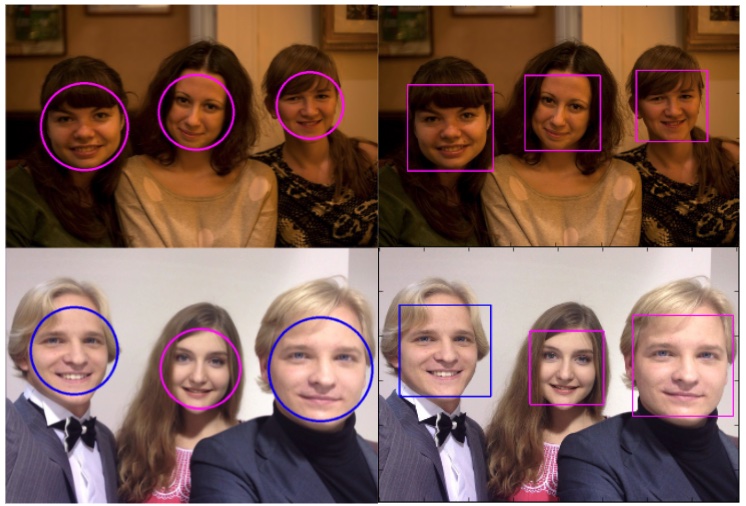

These are in-the-wild predictions on photos of my friends. The results on the left (circles) come from AdaBoost and those on the right (squares) from the best-performing CNNs. Blue indicates male and pink indicates female.

P.S. In the second photo, the CNNs misclassify a male as female.